# Imports

import numpy as np

import pandas as pd

import matplotlib.pyplot as pltIn this blog I am going to explain Regression, it’s type, and how can we code a Linear Regression from scratch in Python. First we will look at the concepts and maths behing this algorithm and then we will implement it using Python, NumPy and Pandas.

By the end of this blog you will bw able to understand:

- What is Regression and classification?

- Difference between Linear Regression and Non-Linear Regression.

- Hyothesis(model) of Linear Regression

- Cost function

- Gradient Descent

- How to code all these equation and algorithm in Python?

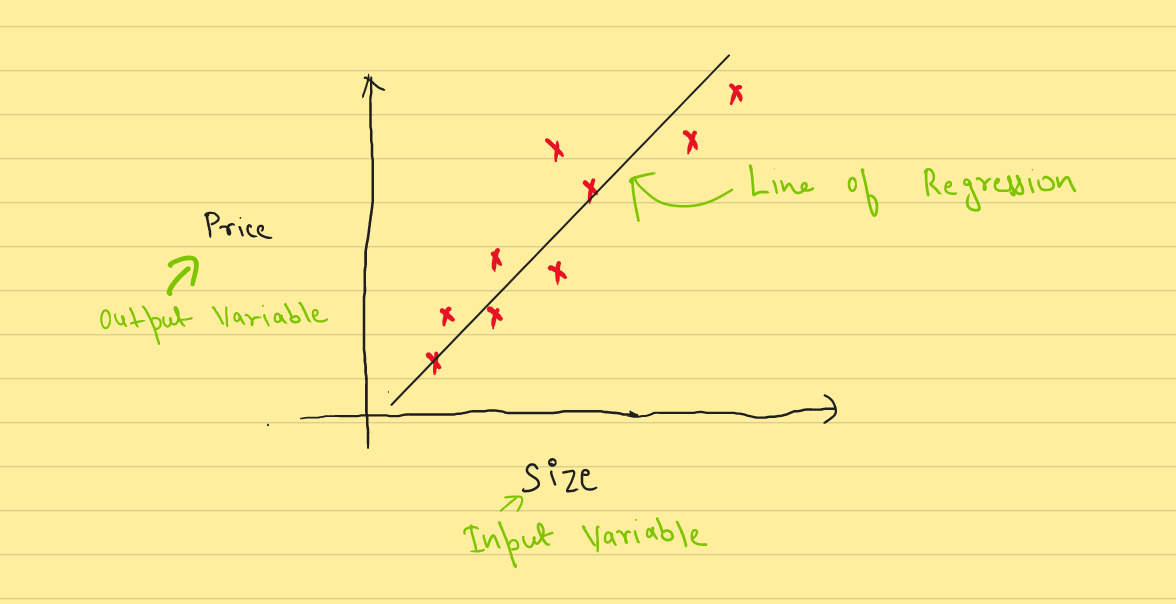

Regression vs Classification

In Machine Learning, If the output variable has continous range, and we have to find the relationship between the the input and output variable(s). This is called Regression. Examples include - House Price, Salary, etc

In contrast, if the the output has descrete range. It is then called Classification. Examples include - Cat vs Dog, Spam/Not Spam, etc

These regresssion models can be used for both Inference and Prediction.

In this blog we are focused to get Prediction using Regression.

Regression

Regression in itself can be of multiple types - Linear and Non-Linear Regression.

Linear Regression - When the model relates the input(independent) and output(dependent) varibale in straight line. Simple Linear Regression is subset of the prior, when there is only single input variable is present.

Non-Linear Regression - When the model relates the input and output varibale in curved line.

I am thowing a lot of jargons here so let me clarify few things before moving forward.

Input Variable/Independent Variable/Feature are used interchangibly. In ML context these are the values which we are going to have to make the Prediction. For example :- For House Price Prediction we need Size. Size is the Input/Feature.

Output Varibale/Dependent Varibale/Target are those values which we are need to predict. Price is the target in case of the last example.

Linear Regression

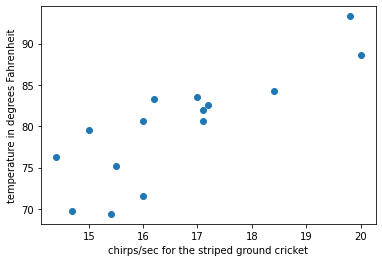

In this blog we are going to implement Simple Linear Regression on a small dataset. The information about the dataset is given below.

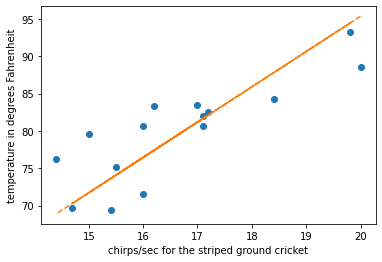

We are using a small dataset from here. This a data about Cricket Chirps Vs. Temperature. We will use linear regression to fit model.

# loading data

data = pd.read_excel('slr02.xls', engine='xlrd')*** No CODEPAGE record, no encoding_override: will use 'iso-8859-1'Now we look into data we see there are two columns X and Y, were

X = chirps/sec for the striped ground cricket

Y = temperature in degrees Fahrenheit

# visualise data

data.head()| X | Y | |

|---|---|---|

| 0 | 20.000000 | 88.599998 |

| 1 | 16.000000 | 71.599998 |

| 2 | 19.799999 | 93.300003 |

| 3 | 18.400000 | 84.300003 |

| 4 | 17.100000 | 80.599998 |

# data we got are in pandas dataframe format

# we need to cast it in numpy array for calulations

X = np.array(data.X)

Y = np.array(data.Y)# Now we have two arrays. One containing input features and other array has output features

# visualise casted data

print(X,Y)

print("Data points:", len(X))[20. 16. 19.79999924 18.39999962 17.10000038 15.5

14.69999981 17.10000038 15.39999962 16.20000076 15. 17.20000076

16. 17. 14.39999962] [88.59999847 71.59999847 93.30000305 84.30000305 80.59999847 75.19999695

69.69999695 82. 69.40000153 83.30000305 79.59999847 82.59999847

80.59999847 83.5 76.30000305]

Data points: 15# function for plotting data points

def plot_points(X, Y, xlabel, ylabel):

"""Plot points given X and Y co-ordinates and labels them"""

plt.plot(X, Y, 'o')

plt.xlabel(xlabel)

plt.ylabel(ylabel)This is the scatter-plot visualization of the data we have used. On X-axis we have chirps/sec and on the Y-axis we have Temperature.

# plot data points

plot_points(X, Y, "chirps/sec for the striped ground cricket", "temperature in degrees Fahrenheit")

Linear Regression hypothesis/model

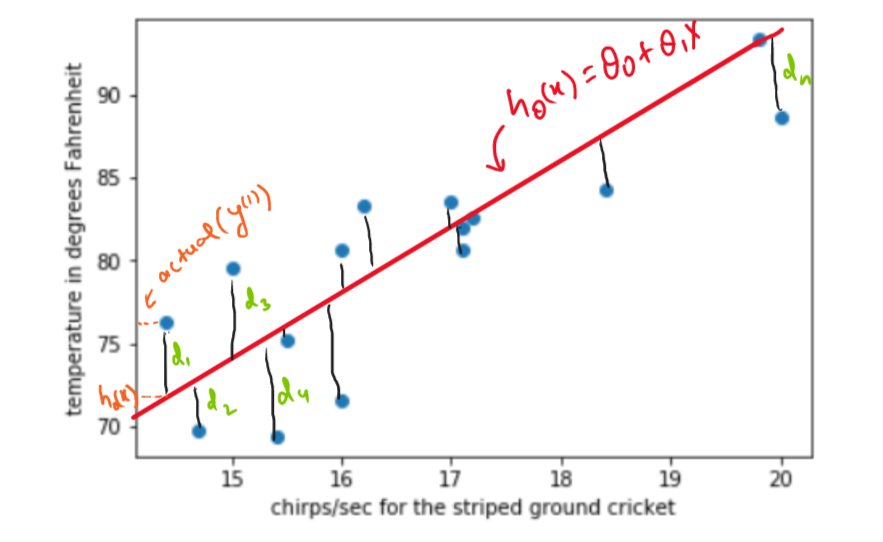

As mentioned earlier in the definition, Linear Regression is a way to find relationship between the input varibale (chirps/sec) and output varibale (temperature) with the help of a straight line.

Our main objective is to fit a straight line though these data points. As we can see the input and output are linearly dependent (as input varibale value increases/decrease, output variable value also tend to increase/decrease).

Objective : To find a best fit straight line through data points

Best fit implies that the distance between the points and line should be minimum.

In the figure, \(d_1,d_2,d_3,...., d_n\) represents the distance between the point and the line and our goal is to minimize the sum of these distances.

Hypothesis Function: A stright line can be represented like \(h_{\theta}(x)={\theta}_0 + {\theta}_1x\). Where \({\theta}_0\) is the intercept and \({\theta}_1\) is the slope of the line. We have to find this \({\theta}_0\) and \({\theta}_1\)

Before moving forward, These are some conventions we have taken for varibale names and mathematical equations.

\(m\) : number of training examples (m=15, in this case)

\(X\) : input / features

\(Y\) : output/ target

\(x^{(i)}, y^{(i)}\) : \(i^{th}\) training data.

\({\theta}_0\) ,\({\theta}_1\): Model Parameters / Weights

# initialised random thetas

np.random.seed(2)

theta = np.random.rand(2,1)

# hypothesis of model

def hypothesis(X, theta):

"""Predicts output feature given input feature and theta"""

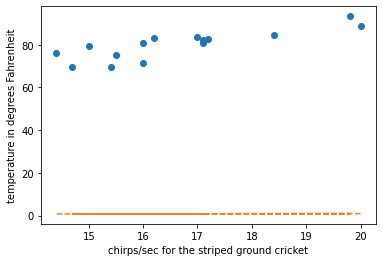

return theta[0] + theta[1] * XLet’s plot the initial line to check how ot fits our data.

# plots line of regression

def draw_line(theta, X):

"""Plot a line from slope and intercept"""

x_vals = X

y_vals = hypothesis(x_vals, theta)

plt.plot(x_vals, y_vals, '--')We want to plot data points and line of regession on same plot to see if we are progressing as we train our model

# plots points and lines

def draw_points_and_lines(X, Y, xlabel, ylabel, theta):

"""Draws lines and points"""

plot_points(X, Y, xlabel, ylabel)

draw_line(theta, X)Now without training our model let’s were the line of regression lies

# draw line of regression without traing model

draw_points_and_lines(X, Y, "chirps/sec for the striped ground cricket", "temperature in degrees Fahrenheit", theta)

Cost Function

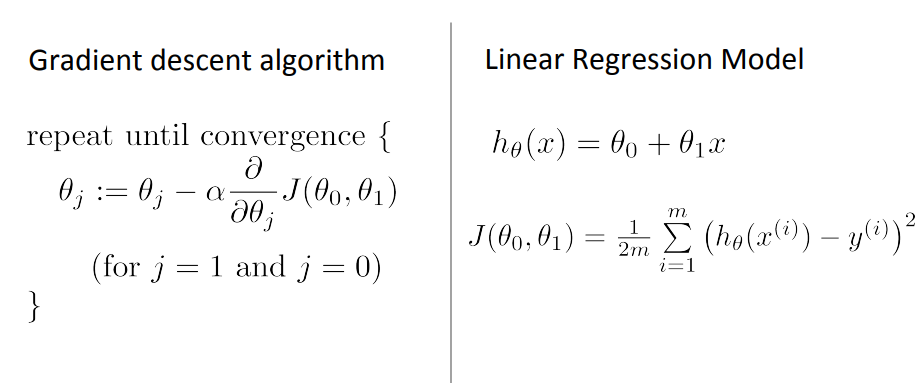

Cost function givies us measure of how much we are error the hypothesis is making? These errors are measured as mean of squared error( \(d_1,d_2,d_3,...., d_n\)) terms.

We will be using squared error cost function which is formulated as,

\(d_{i} = h_{\theta}(x^{(i)}) - y^{(i)}\)(error)

errors can be both postive and negetive in direction so, we sqaure this error term to make all the error terms positive(+ve).

\((d_{i})^2 = (h_{\theta}(x^{(i)}) - y^{(i)})^2\)(squared error)

We need to the line which minimizes the mean of these squared error terms.

Cost function - (Mean Sqaured Error) \[J({{\theta}_0, {\theta}_1}) = \frac{1}{2m}\sum_{i=0}^{i=n-1}(h_{\theta}(x^{(i)}) - y^{(i)})^2\]

Note:- Mean Squared Error is divided by 2 beacuse it will later help when finding gradient of the function

We need to minimize this Cost function with respect to \({\theta}_0, {\theta}_1\). After optimization, it will give us the value of parameters(thetas) which in turn will give us the best fit line.

# cost function

m = len(X)

def cost(X, Y, theta):

"""Returns cost"""

return (1/(2*m)) * np.sum((hypothesis(X, theta) - Y) ** 2)# initial cost without trraining model

cost(X, Y, theta)3154.8870744571304We have now defined a Cost function which tells us how wrong we are from actual label(target). The less the cost - the better. Right now with just random value of theta we have attained a very gigh cost. We need to minimize it. In this blog we are going to use a technique called Gradient Descent for minimization of the cost function.

Gradient Descent / Model Training

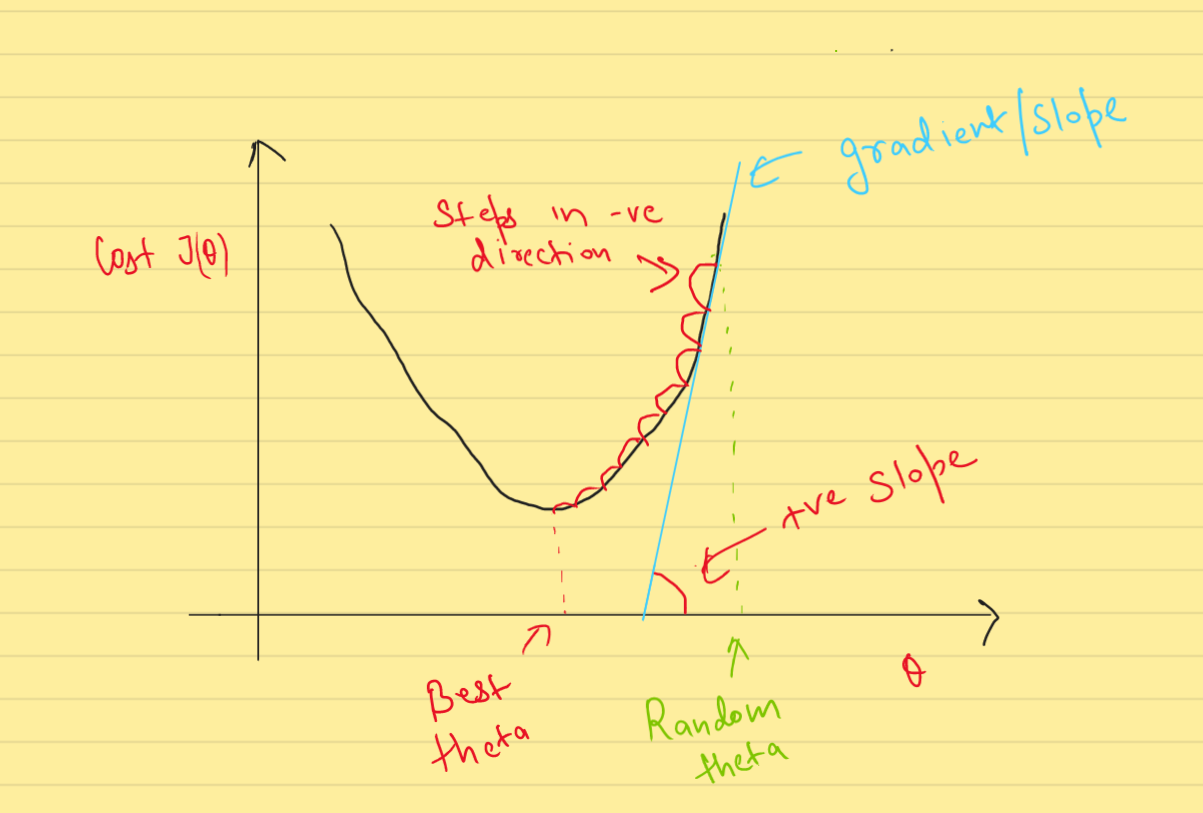

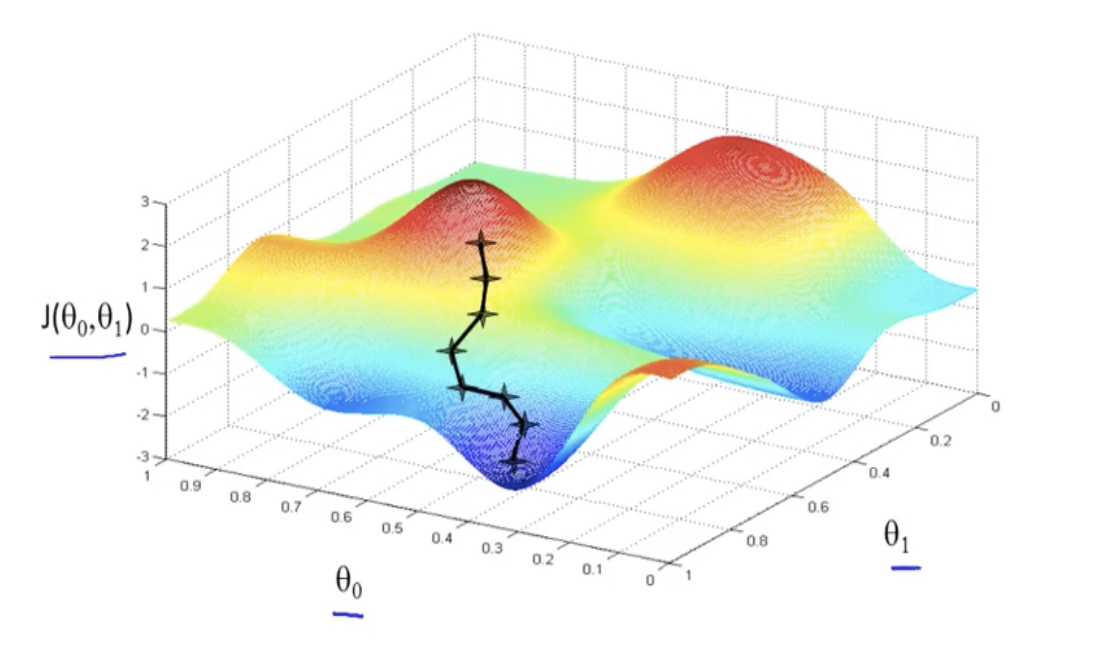

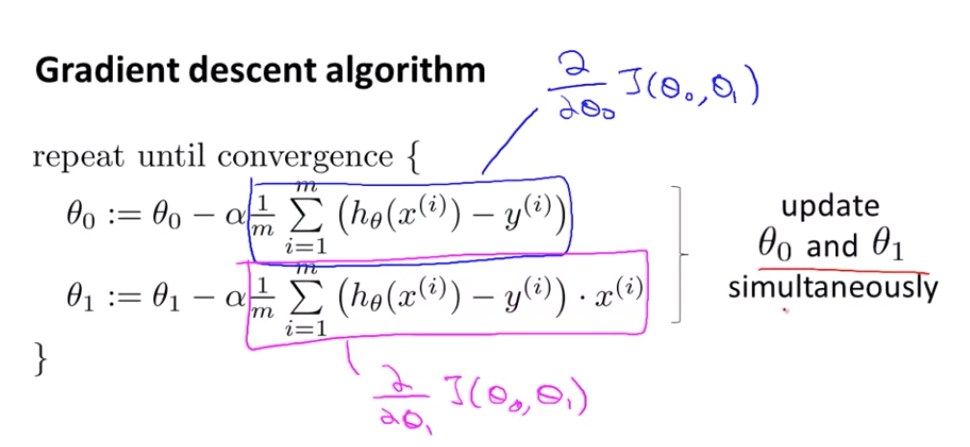

Gradient Descent is the optimization algorithm used to finding the value of paramerter which minimizes the value of cost function. In our case we are minimizing MSE Cost function with the help of this algorithm.

This algorithm works by iteratively updating the values of the parameters(thetas) in the direction of the negative gradient of the cost function with respect to the parameters. This means that at each iteration, the parameters are updated in the direction that reduces the value of the cost function.

The learning rate(denoted by alpha, \(\alpha\)) is the one of the hyperparameter(parameter, for which the value needs to decided manually). We need to set learning rate very carefully, It decides the step size in the negative direction of the gradient(slope).

A higher learning rate can lead to faster convergence, but can also make the algorithm more likely to overshoot the minimum. A lower learning rate can be more stable, but can also lead to slower convergence.

To make a analogy, We can think Gradient descent algorithm like - We are blindfolded and descesnding from a hill(cost function). We will take a step forward evaulate the slope(gradient) and then move according to that. When we will reach bottom of the hill, then the step in any direction will take us upward and thus we know that be have reaced bottom(convergence).

# minimize cost through gradient descent - Model training

def gradient_descent(X, Y, theta, alpha, steps):

for i in range(steps):

old_cost = cost(X, Y, theta)

grad0 = ((1/m) * np.sum(hypothesis(X, theta) - Y))

grad1 = ((1/m) * np.dot((hypothesis(X, theta) - Y), X))

temp0 = theta[0] - alpha * grad0

temp1 = theta[1] - alpha * grad1

theta[0] = temp0

theta[1] = temp1

new_cost = cost(X, Y, theta)

if i%10 == 0: #print every 10th epoch

if new_cost > old_cost:

print("WARNING!!! COST INCREASING")

else:

print("Cost Decresing", new_cost)# train model of 150 iterations

gradient_descent(X, Y, theta, alpha=0.0001, steps=200)

thetaCost Decresing 2980.5693134787443

Cost Decresing 1689.4327589238676

Cost Decresing 959.3382749612664

Cost Decresing 546.4942831760693

Cost Decresing 313.0448146583842

Cost Decresing 181.03693810660252

Cost Decresing 106.39088282551906

Cost Decresing 64.18101546051345

Cost Decresing 40.31272552858337

Cost Decresing 26.815983067351162

Cost Decresing 19.184003183555753

Cost Decresing 14.868350028147061

Cost Decresing 12.427968518501714

Cost Decresing 11.047990290588316

Cost Decresing 10.267634588205215

Cost Decresing 9.826345333024406

Cost Decresing 9.576786793160268

Cost Decresing 9.435645548985352

Cost Decresing 9.35581065326909

Cost Decresing 9.310642443891272array([[0.72065763],

[4.73296698]])After training we can clearly see we have reduced cost, and the cost has converged at a a fixed place, training it furteher will not lead lower cost, thus we can stop the furter interations. We have now found those parameters which gave us minimized cost.

# cost after traing model

cost(X, Y, theta)9.287030172925407# value of theta after training

thetaarray([[0.72065763],

[4.73296698]])# regression line after traing model

draw_points_and_lines(X, Y, "chirps/sec for the striped ground cricket", "temperature in degrees Fahrenheit", theta)

We can clearly see we have fitted line to the points. Thus we have successfully used linear regression to train a model.

Prediction/Inference

Now that we have found the appropriate line of fit through thsese points. We can also use this line to infer values on input values(chirps/sec) which are not present in the training data.

# Temprature when 19 chirps/sec

x = np.array([19])

print(f"Predicted temprature when ground cricket chips 19 times a sec is {hypothesis(x, theta)[0]} degrees Farenheight.")Predicted temprature when ground cricket chips 19 times a sec is 90.64703016147293 degrees Farenheight.